I. Mr. King’s Fall

If you live in Canada it would have been hard over the last few weeks to miss the reports about the fall of one Thomas King. He has fallen, at least in the eyes of some (and no one knows who or how many) because it appears he is not ‘indigenous’, as most people, including Mr. King – maybe – used to think.

This has led to a flurry of articles and opinion pieces in the Globe and Mail, including one written by Mr. King himself, and while there are some good reasons to see it as a tempest in a teacup, I have found it fascinating. It is a sterling example of much of what is wrong with North America in the 21st century, so I am going to write about it.

A lot.

This won’t be short, so buckle up.

And, it’s going to get complicated, but I will start with this. According to the Globe and Mail, “Douglas Sanderson (Amo Binashii) is the Prichard Wilson Chair in Law and Public Policy at the University of Toronto’s faculty of law.”

Yea….so?

Well, Mr. Sanderson (or Mr. Binashii) is the one from whom I learned that word ‘pretendian’, in an essay he wrote in the Globe, titled ‘Indigenous’ is the new ‘Oriental’- and that opens the door to ‘Pretendians’.

What does that have to do with Mr. King? Let’s back up a bit –

Thomas King is a Canadian-American (dual citizen, lives in California) author, ‘presenter’ and ‘activist’ who has most often written about First Nations. For much of his career there was a general belief that he was ‘part’ Cherokee, but the big story this last month is that he has accepted genealogical evidence – whatever that is – that he is not. He is, therefore, one of Mr. Sanderson’s ‘pretendians’, along with others like Buffy St. Marie. At least I think Mr. Sanderson would say that, as his essay is anything but clear. It seems he does not like the term ‘indigenous’, although he admits in the article that it came along to replace ‘aboriginal’, which at least some folks did not like either. Sanderson says that dislike of ‘aboriginal’ was due to a grammatical misunderstanding, and his gripe with ‘indigenous’ seems (to me) to be that it is vague, unlike ‘Metis’ or ‘Anishinaabe’, and Mr. Sanderson thinks this is what opens the door to pretendians. That seems just silly to me. King thought/claimed he was ‘Cherokee’, which seems pretty definite. People who claim or think they are ‘indigenous’ always claim or think they are part of some particular tribe or group, so far as I can see. For example, Buffy-not-the-vampire-slayer claimed she was born a Cree. Not true, it turned out, but that was her claim.

I no longer expect clarity from law professors, and his article is another example of why. But it is not really his article that got me to write this one, beyond the fact that I kinda like his term ‘pretendian’. (If he did not coin it, he does not cite anyone else as doing so.)

That all being said, it’s time to get to the heart of the matter, part I – Mr King’s fall from grace.

Back on Nov 26, after the King story broke, the Globe published an Opinion column by Tanya Talaga titled ‘Thomas King’s storytelling now feels like a betrayal’. [Globe: Tanya Talaga is an Anishinaabe journalist and speaker. Talaga’s mother’s family is from Fort William First Nation and her father was Polish-Canadian.]

Full disclosure now, King has written novels which I have not read, but he also wrote a book called The Inconvenient Indian which gave his view on indigenous/aboriginal – settler relations in North America over the years, and I did read that. I think it was for a book club I was in at the time, and to my admitted surprise, I rather enjoyed it. It was less strident than most ‘grievance’ books, made any number of sensible points, and was clearly and – at times – humorously written. I learned from it, and that is always a good thing, so thank you Mr. King.

I do not feel as though the things I learned are somehow wrong or no longer valuable now that King has decided/revealed he is not really Cherokee. So, I read Talaga’s piece to try and understand why she feels ‘betrayed’.

Talaga writes early in her piece that:

Thomas King is an author I revered, right up there along with Lee Maracle and Murray Sinclair. Mr. King and the late Ms. Maracle were close friends; she spoke of him often. I met him at the Eden Mills writers’ festival, and told him I admired his work.

Ok, so she ‘revered’ King, and I will start with that. Maybe you should not ‘revere’ people you don’t know well. My favourite Canadian author is Robertson Davies. If only I could write like that man. And, to be honest, there have been days when I really really wished I was Keith Richards. That guy is amazing, partly just for still being alive, but also because he wrote so much of what I think of as the best rock riffs ever recorded. I even bought his autobiography, and I never buy those.

But still, I know that ol’ Keef is just a bloke, and he did a lot of bad things, as have we all. I could never say I revere him. Perspective, man.

Reverence aside, here is a pretty clear statement of the source of Talaga’s distress, I think:

I found it unbelievable that the person who wrote The Truth About Stories in 2003, a book I leaned on for its brilliant tear-down of the myth of the “noble savage,” was not, in fact, Cherokee.

Maybe this tells us something useful, at least about Ms Talaga, and perhaps about others. If you thought Mr. King did a brilliant job of tearing down that myth, what does his – for lack of a better term – ethnic heritage have to do with that? If it was brilliant, the way I find Robertson Davies’ writing brilliant, what difference does it make that Davies was a (as far as I can tell) Scottish-Canadian Anglican?

Part of an answer perhaps comes from this quote from Talaga’s article:

Until Mr. King can prove that he is Indigenous, or a member of another First Nations community via his paternal side, his work cannot be understood as being told from the perspective of an Indian, but of an ally, and it must be read through that lens. His books should not be added to courses on Indigenous literature and history; unless he proves otherwise, his work must move to the Canadian literature section.

So, it is about borders. Boundaries. Fences, and who is inside them and who is outside. His work must indeed be ‘understood’ differently (whateverthehell that means) if he is not a member of some ‘First Nation’.

Ms Talaga feels betrayed because she thought King was inside the fence, and it turns out he is not. I searched my brain for an analogy, and here is the best I can do.

Imagine you have had a family doctor for 15 years who you went to with health problems, and who you found at least mostly helpful. One day you learn he does not actually have a medical license, he has been practicing without one. Now he is likely going to go to jail, but the analogy here is about the question: how would you feel? Betrayed? You’re still alive, nothing he did killed you or harmed you irreparably. Yet, I can easily see that in that position a person would be angry, and maybe feel that the fake doc had betrayed their trust.

The difference would seem to me to be that a medical license implies the guy knew things that enabled him to help you. You thought he was trained, yet he was not. His lack of training meant he might have hurt you. Being part Cherokee does not imply any special training, but I suspect that Ms Talaga, and others, would not see it that way. They would assert that only someone who is actually ‘Indigenous’ can possibly credibly tear down the myth of the noble savage. I disagree, I don’t see how any ethnic heritage makes anyone more or less able to do anything.

Another quote from Ms Talaga:

The searing harm of Mr. King’s story, as it stands, is that it plants doubt into the many sisters and brothers out there who want to find us and come back home. It leaves other truly Indigenous authors overlooked. It crowds out our own truthful stories from education curricula, from the zeitgeist, from Canadian arts spaces.

I have no idea what that first sentence is trying to say. Doubt about what? Doubt that some people who say they are indigenous really are? Ok, sure, but such doubt would include everyone, including me. What could the reference to those ‘brothers and sisters’ possibly mean?

I don’t know, but the rest of the quote is to me about more border policing. King’s unwarranted claim to be indigenous meant that his work kept other work from the ‘truly Indigenous’ from being recognized – and getting on reading lists and such.

Before I move on to Part II, which my old employer was kind enough to provide material for, I close with one last quote from Talaga. It is the last line from her article:

Now we all have to find healing, again.

Oh fergodsakes, Tanya. ‘we all’? Get over yourself. It does not occur to some people, and Talaga is hardly unique in this, that not everyone shares their perspective. I am willing to assert that Canada and the US are full of people who see themselves as indigenous or Cherokee or Metis or whathaveyou, but who feel no need to heal again as a result of Mr. King’s newly revealed ethnic status. They have known all along that there are ‘pretendians’ around, this is just one more, and they will get along just fine from here on out despite that. No extra healing required.

II. Policing the Borders of Material Advantage

As I mentioned above, one of the many things to appear on the Globe website about the King story was an Opinion piece by King himself, in which he sort of tries to explain himself. Ms Talaga says in her article:

Mr. King appears to be another person who truly believed they were First Nations – and profited from that identity – but does not have living blood ties to a family, to a community, to anyone on or around the rez.

So, she is giving him the benefit of the doubt, believing that he truly believed, at least for awhile, that he was ‘indigenous’. Reading King’s G&M piece, I am less sure of that. It reads to me like the confession of someone who for a very long time had doubts about his own indigeneity, but chose not to look too hard at it, until an organization called TAAF (The Tribal Alliance Against Frauds) did some digging. [In one of those ironies with which the world is replete, TAAF is also how The Asian American Foundation refers to itself.]

You can check out the first TAAF here – https://tribalallianceagainstfrauds.org/

And here is the thing. If you are accepted as being ‘indigenous’, there are material advantages that accrue to you in the 21st century. Part of the reason for this is the existence of people like Tanya Talaga who might not read your books about anything related to indigenous peoples if you are not inside the fence.

But it runs much deeper than individual attitudes, a fact which my former employer is making clear these days.

I got another email from the powers at UWO a couple of weeks back, telling me of a draft of a new policy and related procedures they are working on. I know – yawn.

However, this one deals with people who claim to be ‘indigenous’. This came out just before the King thing surfaced, and has been in process for awhile, so it was not a reaction to The King Revelation. There have been plenty of previous ‘pretendian’ cases, as I said, and I suspect that includes some at UWO – which the powers would do everything to keep quiet, of course.

Anyway, here is a direct quote from the draft set of procedures I was sent:

Consistent with the concept of Indigenous self-determination, this Procedure is initiated once a member or prospective member of the University community who is subject to the Policy makes a declaration of Indigenous citizenship or membership that will result in a new or renewed material advantage to them at Western or is otherwise subject to the Policy.

This puts the crux of the matter in amazingly clear language for a bureaucratic document – ‘new or renewed material advantage’ indeed.

What might those be, you ask? Well there are some obvious ones. First, you will not get a faculty appointment in UWO’s Indigenous Studies department if you are not ‘indigenous’, but you can be sure that your application for a faculty appointment in any department will be enhanced by such status. Applying for a scholarship or post-doc position? Applying for a research grant? Appealing your mark in Econ 2251? The list goes on and on, and in every circumstance your chances of success will be enhanced by your indigenous status.

The procedures in the doc I was sent are all about how the U decides whether someone is inside the ‘indigenous’ fence, and thereby entitled to the relevant material advantage. The reason for the policy and related procedures are self-evident. If someone get such an advantage and then turns out to be a ‘pretendian’ it is very embarrassing for UWO in general and for those who conferred said advantage in particular. Just imagine if Buffy St Marie had been appointed as an ‘indigenous’ faculty member in the Faculty of Music, eh?

So, those borders must be policed.

And, the details of the policing are fascinating in itself.

If you want the material advantages, you have to say so (the appropriate form you must submit is attached to the document I was sent as Appendix 2: Declaration of Indigenous Citizenship or Membership) and then a committee will be struck to determine whether or not to affirm your claim to ‘indigeneity’.

How will this affirmation decision be made? Like this:

“The Vice-Provost & Associate Vice-President (Indigenous Initiatives) (VP/AVP II) will:”

[Aside: I love the initials. VP/AVP II. Do you suppose their admin assistant is R2D2?]

a) form an Indigenous Affirmation Advisory Committee (IAAC) on an as needed, ad hoc basis in accordance with this Procedure to verify all declarations of Indigenous citizenship or membership that are subject to this Procedure;

b) the IAAC will be comprised of a Chair and two (2) other local Indigenous community members from any of the local First Nation communities upon which the University is situated.

The procedure specifies that this IAAC Chair must be ‘an affirmed Indigenous faculty member, staff, librarian or archivist employee of the University’.

The document goes on to say that in doing its work, the IAAC will:

“provide trigger warnings leading up to any discussion where Indigenous citizenship or membership may be raised in the process of recruiting/ identifying an Indigenous-designated position(s), award, etc.; “

Also….

“share relevant and accessible resources, as listed in Appendix 1, with all individuals seeking affirmation as they may find the discussion around Indigenous citizenship or membership to be triggering;”

I won’t belabour you with all of Appendix I, save to say it lists a whole lot of ‘mental health resources’, including The First Nations and Inuit Hope for Wellness Help Line: 1-855-242-3310 or https://www.hopeforwellness.ca

….and so on.

I sat on both sides of many tenure-review committees during my career. People who failed these reviews got, well, fired. No one was told about such resources. Barbaric, I know.

Naturally, the IAAC is going to want to see some kind of documentation to support the claim of indigeneity. Here’s what the procedures doc says about that:

Upon direction of the University, anyone who makes a declaration of Indigenous citizenship or membership that is subject to the Policy will be required to provide the IAAC with the following current documentation for review and have affirmed:

(a) a statement of relational positionality1 (up to 4 pages) that includes details of:

(i) the individual’s personal lived experience in relation to their declaration of Indigenous citizenship or membership;

(ii) the individual’s personal connections, responsibilities, and interrelationships with their Indigenous Nation, Clan, language, culture, community, land, and other relevant responsibilities including details of the individual’s lineal descent; and;

(iii) where relevant, details about how colonial practices (e.g., the ‘Sixties Scoop’, Residential Schools) have impacted how the individual is able to claim Indigenous citizenship or membership as a person with lived experience.

There is a (b) also, which states that the applicant must present two out of a list of 15 possible documents. It also goes on to say that the IAAC may, at its discretion, require even more than this. It also says that if the applicant cannot come up with two of the 15 listed documents, a sworn affidavit from the applicant and two community reference letters might do the trick.

This might, to the unenlightened, seem like it makes gaining the relevant material advantages rather easy. Any 2 of 15 docs will do, and if you cannot come up with those just get two community members to swear an affidavit. Seems a pretty low bar.

Of course, anyone who has worked in an LBO knows that the primary purpose of procedures like this is to cover the LBO’s ass. If a ‘pretendian’ should be revealed at any point, the U’s top bureaucrats can say, with their hands over their non-existent hearts – ‘Hey, we had a thorough procedure and we followed it. Not our fault this asshole found a way around it.’

The procedures doc goes on to say how the appeal process works if indigenous membership is ‘not affirmed’ (‘denied’ is such a harsh word) by the IAAC. Guess what – a new committee is formed to consider the appeal.

And, one should not lose sight of the fact that all of this will involve tons of time by the six-figure-salaried VP/AVP II, and no end of other bureaucrats and faculty. For every case.

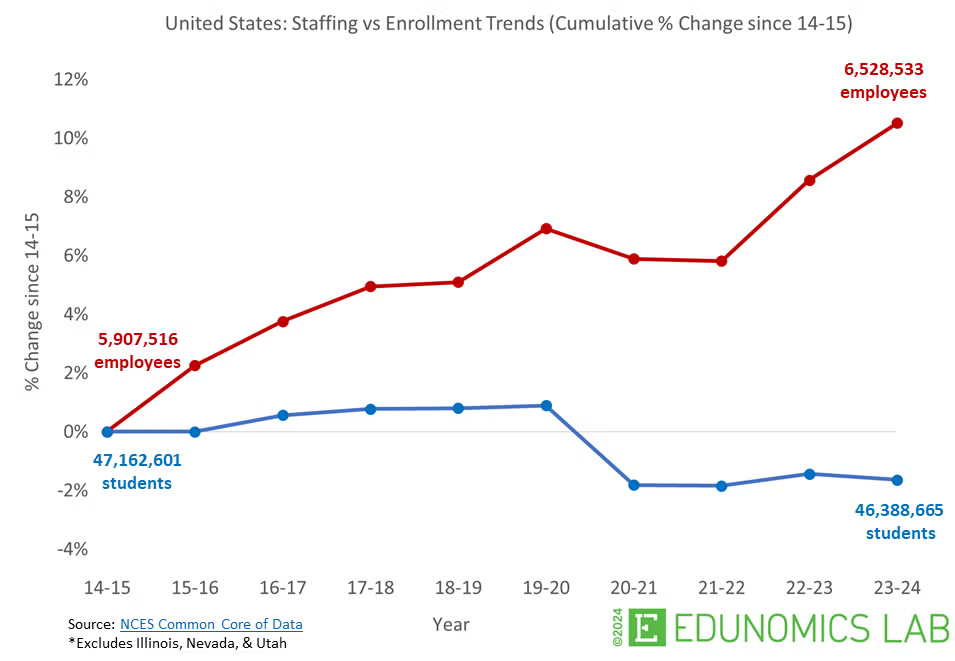

Why oh why has the number of bureaucrats in universities risen so much more than the number of students or faculty over the last 20 years?

But hey, those borders must be policed, right?

or……….

or……….