No Longer Merrye Olde England

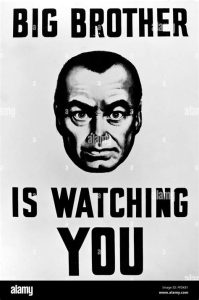

If you grew up in North America at any point in the last 150 years, you are likely to see England as the source of much of what we view as liberal democracy: the Magna Carta, a representative Parliament, the idea that a man’s home is his castle. All of it says that it is the citizen who has rights, and that the government exists to serve the interests of those citizens.

The UK today hardly lives up to that image. Here is a complete story recently published on the website of Reason Magazine. It’s brief, but its link takes you to an MSN article that purports to be from The Telegraph, a UK newspaper. The actual Telegraph story is behind a paywall, but it does exist, dated March 2025.

In England, the Hertfordshire Constabulary will pay Maxie Allen and Rosalind Levine around $26,000 after wrongly arresting them for complaining about their daughter’s school in a private WhatsApp group. Six police officers arrested the couple at their home and detained them for 11 hours on suspicion of harassment and malicious communications. Their “offense” was questioning the school’s head teacher recruitment process. The school reported the parents to the police, claiming their messages upset staff. After a five-week investigation, police found insufficient evidence and took no further action.

If the MSN article is accurate, then Reason is understating things a bit. The MSN piece notes that:

The couple were arrested after the primary school complained about Mr Allen and his partner sending numerous emails and making “disparaging” comments on a parents’ WhatsApp group.

The couple had been banned from entering the school after questioning the appointment process for a head teacher and “casting aspersions” on the chairman of governors on WhatsApp.

So they were not just talking to other parents; they also sent emails to the school itself, and one could imagine such emails being threatening. However, the same article then says this:

Mr Allen said that the worst example they have since been able to find was Ms Levine describing a senior school figure as a “control freak”. “That was the strongest remark we could find – no obscene language, no threats,” he said.

It seems fitting to include the last sentence of the article, a quote from a ‘police force spokesman’:

“Therefore, Mr Haddow-Allen and Ms Levine were wrongfully arrested and detained in January 2025. It would be inappropriate to make further comment at this stage.”

Yeah, inappropriate. Just pay the money and shut up.

One last quote:

Having questioned the couple on suspicion of harassment, malicious communications and causing a nuisance on school property, Hertfordshire Constabulary ruled that no further action should be taken after a five-week investigation.

So this was an investigation into, among other suspected offences, something called ‘malicious communications’. What the freak can that mean? ‘Fraudulent communication’ I could see being a crime, particularly as part of an attempt to separate someone from their possessions or cash. Is calling someone a ‘control freak’ enough to clear the bar for ‘malicious’? Apparently not, but then I’d like to know what is.

The two things I find most appalling about this:

- The school bringing in the police. This is up there with the Biden Attorney General wanting to look into parents who complained to and about their school boards as examples of ‘domestic terrorism’. Government bodies are finding more and more ways to avoid being questioned about anything they do, and this is another example of that very disturbing trend. Apparently, parents should not object to the way a head teacher is selected. I can well imagine that the couple in this story were considered pains in the ass by the school, and perhaps deservedly so. That does not justify calling the police.

- The police sending six officers to arrest and detain this couple. This is another example of a 21st-century trend: police use of ‘massive force’ in any and all situations. I presume the idea is to protect the police, but six officers to arrest a couple for ‘malicious communication’?

I suppose the fact that the police, whose five-week investigation ended with no further action, cut a cheque to the couple for a not-inconsiderable amount can be taken as some sort of good news. It is certainly an admission, even in the UK, that what happened was wrong. But this story, coupled with others coming out of England, such as the comedian arrested after getting off a plane in England for comments he made online that were critical of UK immigration policies, makes it hard for me to think of England as a place where citizen liberty is protected. It seems more like a country in the vanguard of protecting government officials from criticism.