Neo-Toddlerism and AI

I’ve had a bug for about a week, and because I am a wuss when unwell, this has dented my productivity. Which is a fancy way of saying that I have not gotten much accomplished while feeling ill, including on this blog. As partial make-up while my health is on the upswing, I point you to a blog I like a lot, and which seems to be freely available, called The Prism. It is written by someone who calls himself Gurwinder. I assume that is a family name; I can find no other identification of him on the site. This is what he writes about the purpose of his blog:

“…this blog is my attempt to describe the myriad ways in which technology and psychology conspire to fool us, and to explore how we can withstand the covert assault on our senses.”

That is a purpose I am happy to sign up for.

I’ve read and learned from many of his posts, but the one I want to say a bit about here is titled The Rise of Neotoddlerism: Why activists are behaving like infants, which he posted in August of 2024.

You can read it yourself here, so I won’t go on at length about it, but it dovetails in an interesting way with something else I came upon, which I will write a bit about.

The general point made in the Prism post above is that activists of all stripes, right, left, whatever, now engage in activities that resemble the behaviour of toddlers. They block streets, throw paint on paintings and statues, break into church services and lectures and shout down speakers, etc. etc. Simply put, they throw toddler-tantrums.

They do this because it is much easier than actually trying to solve difficult problems, and because it gets them and their cause plenty of attention from the media. That includes legacy media as well as the newer phenomenon of online/social media. It is the growth of that second thing since 2009 that caused neo-toddlerism to emerge as a preferred strategy for these people. That’s a rough outline of his argument; the post itself is long and full of examples, and as I say, you can read it yourself if you want to learn more.

The post is a bit dated, and one thing he does not investigate is the role of AI/LLMs in this ‘neo-toddler’ syndrome. One might imagine that this new technology makes it less costly to spread news about such behaviour on the internet, at minimum. One certainly reads reports – how accurate they are I cannot say – that AI has been used to flood the internet with misinformation of various kinds. So, one can imagine a cycle in which some neo-toddlers do something tantrum-like for their cause, and then their allies use AI to spread and amplify and, if necessary, distort what was done.

That’s not a happy prospect, in my view, but here is the other thing I came across recently that might begin to tell us something about this possible cycle, and, just maybe, offer some hope that things are not so bad as all that.

I was led to an article called More Articles are Now Created by AI Than by Humans on a website called Five Percent. Again, you can click on the title and read it yourself; it’s pretty involved, but here is what it is about. It describes the results of a project to determine how much of the written content now available on the great www is written by AI. The title of the piece gives the answer they came to, but one first has to appreciate what a major undertaking it is to try to answer that question. I am writing about it only because, after working through everything on this site, I think these folks did a very careful job of trying to answer the question accurately.

First, their ‘Key Takeaways’ from the project are worth listing in detail:

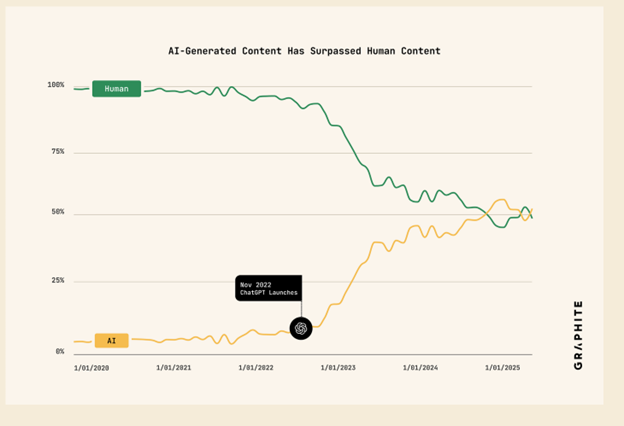

- The quantity of AI-generated articles has surpassed the quantity of human-written articles being published on the web.

- However, the proportion of AI-generated articles has plateaued since May 2024.

- Despite the prevalence of AI-generated articles on the web, we show in a separate study that these articles largely do not appear in Google and ChatGPT. We do not evaluate whether AI-generated articles are viewed in proportion by real users, but we suspect that they are not.

- Our study did not evaluate the prevalence of AI-generated / human-edited articles, and they may be even more prevalent.

The graphic below from the article does a good job of illustrating the first two of those takeaways:

I will come back to these, but my own key takeaway is that these researchers took seriously the difficulties inherent in their enterprise. Here are just some of those difficulties:

- The number of www sites is so large that there is no hope of checking them all. Thus one must check a sample of them, which means that one must work very hard to make that sample representative of the entire www.

- One must have a reliable way of determining when an article is AI-generated, something which is recognized to be difficult.

- Given the second difficulty, one needs to reliably test whatever tool is used to detect AI-generated articles for its rates of ‘false positives’ and ‘false negatives’.

They acknowledge all this and explain in detail how they dealt with each item, as well as the limitations inherent in what they do find. I was impressed.

As to what they do find in their Key Takeaways, my readers will not be surprised to hear me say that knowing that there is more AI-written than human-written stuff on the web now does not make me happy. However, all is not bad news.

First, the proportion has plateaued. Maybe that is temporary, and it’s not at all clear why it has happened, but it is something.

Better yet, the authors’ third finding suggests something else. They note that Google and ChatGPT do not often reference the AI-generated stuff. That is interesting, to me, if it means that not many people are reading the AI stuff. But I would want to push this further. I don’t read random stuff on the web. Yes, I write about my ‘wandering the web’, but what that consists of is my being on some site I find useful and trustworthy, and having that site refer me to another site, which turns out to be interesting. Certainly I sometimes find myself looking at a site that I pretty quickly see is garbage, and so I move on.

If that is what most people do, then there is some hope that the web still has reliable gatekeepers, even if that is not how they see themselves. I start from sites like the WSJ and Gelman’s Stats blog and TFP and such, and for all my bitching about those sites (which they deserve) they do not send me off to read AI slop.

If the WSJ et al ever start doing that, then we are doomed, I suppose, but so far that is not the case. And for them not to do so, the WSJ’s people must know AI slop when they see it, and hence not send their readers to it. A sort of web of ‘reliable sources’ is what needs to exist. I am hopeful that is still the case, even on the Wild West Web as it exists today.

My remaining concern is that people who spend a lot of time on social media – X, Bluesky, Instagram, TikTok, etc. – are rather more likely to be sent off to AI slop, because those platforms allow other users to send you to other sites. And I confess to being surprised by the project’s finding that ChatGPT does not refer people to AI-generated content. I wonder why, and how long it will last.

Not being a social media or AI user myself, I don’t really know.

Final note – this same wandering took me to a paper titled ‘Quantifying large language model usage in scientific papers’ published in Nature Human Behaviour. The abstract suggests the authors tried to find out how much LLMs like Claude are being used to write academic research papers. I want to follow up on that, but it will require me to use my super-power of University Library access to journals with paywalls, so it will take some time. Stay tuned.

In the meantime, I do recommend Gurwinder’s The Prism for thoughtful writing. I go back regularly.